Hi Roman,

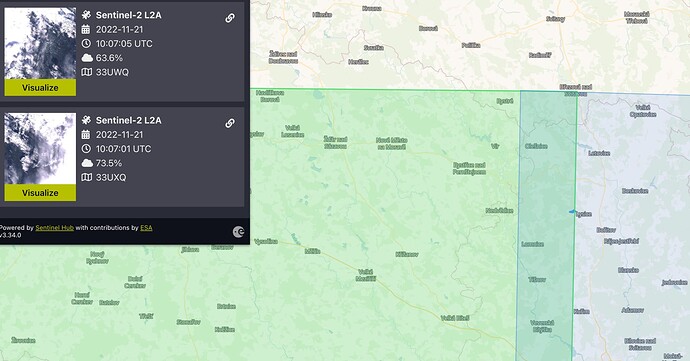

After some investigation, I don’t think that anything unexpected is occurring in your workflow. Your area of interest is intersected by two different Sentinel-2 tiles (33UWQ and 33UXQ) that were acquired within seconds of each other, and by default the Sentinel Hub API uses the most recent tile first. In cases like this, that means that pixels from tile 33UWQ (the tile highlighted in green) override the pixels acquired in 33UXQ.

By default, the visualisation in EO Browser also uses “MostRecent” tiling, and in the linked example, you can see the border where the two Sentinel-2 tiles overlap. This is explained in more detail here in our documentation.
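To make the "most recent first" behaviour more concrete, here is a small standalone sketch in plain JavaScript. It is only a simplified mental model and not the actual Sentinel Hub implementation: the function `mostRecentPixel`, the tile objects, the acquisition times and the pixel values are all made up for illustration.

```javascript
// Illustrative model only, not the Sentinel Hub implementation.
// Each hypothetical tile has an acquisition time and a lookup that returns
// the pixel value, or undefined where the tile does not cover the pixel.
function mostRecentPixel(tiles, x, y) {
  // Copy before sorting so the caller's array is left untouched.
  const ordered = [...tiles].sort((a, b) => b.acquired - a.acquired);
  for (const tile of ordered) {
    const value = tile.valueAt(x, y);
    if (value !== undefined) {
      return value; // the newest tile that covers the pixel wins
    }
  }
  return undefined; // no tile covers this pixel
}

// Made-up example: two tiles a few seconds apart that overlap at x = 1.
// The values stand in for SCL classes (3 = cloud shadow, 5 = bare soils).
const tiles = [
  { id: "33UXQ", acquired: 0, valueAt: (x, y) => (x >= 1 ? 5 : undefined) },
  { id: "33UWQ", acquired: 10, valueAt: (x, y) => (x <= 1 ? 3 : undefined) },
];
```

In this toy setup, `mostRecentPixel(tiles, 1, 0)` picks the value from the newer tile in the overlap, while `mostRecentPixel(tiles, 2, 0)` falls back to the older tile because the newer one does not cover that pixel.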

In addition, examining the scene classification shows that it is not actually Cirrus pixels that are causing the issue for you, but pixels that have been classified as Cloud Shadow.

To counter this, you can use the mosaicking functionalities within the evalscript itself. I have edited this in your script below:

    //VERSION=3

    function setup() {
      return {
        input: [{
          bands: ["SCL"]
        }],
        output: {
          bands: 1
        },
        // Deliver every intersecting tile separately instead of one mosaic
        mosaicking: "TILE"
      }
    }

    let viz = new Identity();

    function evaluatePixel(samples) {
      // samples[0] is the first scene in the list returned by "TILE" mosaicking
      return viz.process(isValidVeg(samples[0].SCL));
    }

    // true if pixel is valid vegetation, bare soil or water
    function isValidVeg(scl) {
      return (scl == 4) || (scl == 5) || (scl == 6);
    }

    // SCL VALUES
    //  0 No Data
    //  1 SC_SATURATED_DEFECTIVE
    //  2 SC_DARK_FEATURE_SHADOW
    //  3 SC_CLOUD_SHADOW
    //  4 Vegetation
    //  5 Bare Soils
    //  6 Water
    //  7 SC_CLOUD_LOW_PROBA
    //  8 SC_CLOUD_MEDIUM_PROBA
    //  9 SC_CLOUD_HIGH_PROBA
    // 10 SC_THIN_CIRRUS
    // 11 SC_SNOW_ICE

Note that in the setup function, the mosaicking parameter has been added to the object that the function returns, alongside input and output.

Secondly, in the return statement of evaluatePixel, samples[0] now specifically selects the first scene (33UXQ) in the list of scenes returned by “TILE” mosaicking. If the index is changed to [1], your result would instead use pixels from the second tile that was acquired (33UWQ).

return viz.process(isValidVeg(samples[0].SCL));

To demonstrate this, we can compare the returns of the scene classification visualisation layer with samples[0] and samples[1].

Samples[0]:

Samples[1]:

You can observe that in the first tile, the majority of pixels were classified as non-vegetated, whereas in the second tile (the most recent), they are all cloud shadow.
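If you would rather not hard-code an index, one option is to check every tile and accept the pixel when any of them classifies it as valid. Below is a minimal sketch of that idea in plain JavaScript. It assumes that `samples` is the per-tile array delivered under “TILE” mosaicking and that classes 4, 5 and 6 are the ones you consider valid; the helper names `isValidScl` and `anyTileValid` are hypothetical and not part of the Sentinel Hub API.

```javascript
// Hypothetical helper: true for SCL classes 4 (vegetation),
// 5 (bare soils) and 6 (water).
function isValidScl(scl) {
  return scl === 4 || scl === 5 || scl === 6;
}

// Hypothetical helper: true if at least one tile classifies the pixel as valid.
// `samples` is assumed to be an array of objects with an SCL property,
// one entry per tile, as delivered under "TILE" mosaicking.
function anyTileValid(samples) {
  for (let i = 0; i < samples.length; i++) {
    if (isValidScl(samples[i].SCL)) {
      return true;
    }
  }
  return false;
}

// Inside the evalscript, evaluatePixel could then return something like:
//   return [anyTileValid(samples) ? 1 : 0];
```

Whether "any tile is valid" is the right rule depends on your use case; since both tiles here were acquired within seconds of each other, you may prefer to keep a fixed index so that the result always comes from the same tile.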

Lastly, below is the scene classification without using the “TILE” mosaicking parameter. As you can see, it is a combination of both tiles.

I hope that this explanation helps you understand how mosaicking functions in our APIs. If there is anything else I can clarify, let me know!