Hello,

I want to download very large images, for example 49572x21256 pixels. Unfortunately, Sentinel Hub can only provide images of up to 2500x2500 pixels per request. Is there any way to solve this problem?

I developed two algorithms, but neither works properly.

First algorithm:

To collect images:

    import os

    import cv2
    import numpy
    import sentinelhub
    from sentinelhub import CRS, BBoxSplitter
    from shapely import geometry
    from shapely.geometry import shape

    # `script` (the evalscript) and `config` (the Sentinel Hub configuration)
    # are assumed to be defined earlier; they are not shown in this post.

    patch_x = 30
    patch_y = 30

    # Area of interest as a closed ring of (longitude, latitude) points.
    coords_ = [
        [
            [37.81111, 38.724315],
            [43.552852, 38.724315],
            [43.552852, 36.614646],
            [37.81111, 36.614646],
            [37.81111, 38.724315],
        ]
    ]

    coords = geometry.Polygon([[p[0], p[1]] for p in coords_[0]])

    # Split the area into a patch_x by patch_y grid of bounding boxes.
    bbox_splitter = BBoxSplitter(
        [shape(coords)], CRS.WGS84, (patch_x, patch_y),
    )
    coords = bbox_splitter.bbox_list
    coords = numpy.array(coords)
    coords = numpy.reshape(coords, (patch_x, patch_y))

    for x in range(patch_x):
        # One folder per column of patches.
        os.makedirs("test/{}".format(x), exist_ok=True)
        for y in range(patch_y):
            coord = coords[x, y].get_geojson()['coordinates']
            x_min, y_min = numpy.min(numpy.array(coord), axis=1)[0]
            x_max, y_max = numpy.max(numpy.array(coord), axis=1)[0]
            bbox = sentinelhub.BBox([x_min, y_min, x_max, y_max], crs=sentinelhub.CRS.WGS84)
            # Pixel size of this patch at 10 m resolution.
            size = sentinelhub.bbox_to_dimensions(bbox, resolution=10)
            request = sentinelhub.SentinelHubRequest(
                evalscript=script,
                input_data=[
                    sentinelhub.SentinelHubRequest.input_data(
                        data_collection=sentinelhub.DataCollection.SENTINEL2_L2A,
                        time_interval=("2022-04-01", "2022-30"),
                        maxcc=0.9,
                    )
                ],
                responses=[sentinelhub.SentinelHubRequest.output_response("default", sentinelhub.MimeType.TIFF)],
                bbox=bbox,
                size=size,
                config=config,
            )
            image = request.get_data()[0]
            cv2.imwrite("test/{}/{}.tiff".format(x, y), image)

To merge images:

    import os

    import cv2
    import numpy

    target = 'test'
    folders = list(range(len([i for i in os.listdir(target)])))
    ref_w = 0
    # Canvas for the merged image; the size is hard-coded.
    image = numpy.zeros((25000, 55000, 3), dtype=numpy.uint8)
    #folders.reverse()
    for folder in folders:
        rows = []
        folder_name = str(folder)
        files = list(range(len(os.listdir(os.path.join(target, folder_name)))))
        files.reverse()
        print(folder)
        ref_h = 0
        for file in files:
            file_name = str(file) + ".tiff"
            tmp_image = cv2.imread(os.path.join(target, folder_name, file_name))
            tmp_w = tmp_image.shape[1]
            tmp_h = tmp_image.shape[0]
            # Paste the patch below the previous one in the same column.
            image[ref_h:ref_h + tmp_h, ref_w:ref_w + tmp_w, :] = tmp_image
            ref_h = tmp_h + ref_h
        # Move right by the width of the last patch in this column.
        ref_w = tmp_w + ref_w
    #image = image[:,::-1,:]
    print(ref_w, ref_h)
    cv2.imwrite("tmp2.tiff", image)

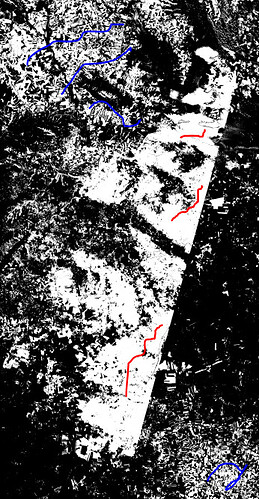

This is very useful, but unfortunately there are gaps where the patches meet, and the image looks curved when viewed as a whole. Unfortunately, the Sentinel Hub forum does not let me share the second image, which shows the curvature.
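One thing that may contribute to the gaps: the merge script advances `ref_w` by the width of whichever patch was read last in a column, and the canvas size is hard-coded. A minimal sketch of deriving both the canvas size and every paste position from the patch sizes themselves is shown below. The function name `tile_offsets` and the `sizes[x][y]` layout are hypothetical, chosen only for this illustration.

```python
def tile_offsets(sizes):
    """Return ((canvas_w, canvas_h), offsets) for tiles laid out in columns.

    `sizes[x][y]` is the (width, height) in pixels of the tile in column x,
    counted top to bottom. This layout is an assumption of the sketch.
    """
    offsets = {}
    x0 = 0
    canvas_h = 0
    for x, column in enumerate(sizes):
        y0 = 0
        for y, (w, h) in enumerate(column):
            offsets[(x, y)] = (x0, y0)
            y0 += h
        canvas_h = max(canvas_h, y0)
        # Advance by the widest tile so the next column never overlaps this one.
        x0 += max(w for w, _ in column)
    return (x0, canvas_h), offsets


sizes = [
    [(3, 2), (3, 2)],  # column 0: two tiles, each 3 px wide
    [(2, 2), (2, 3)],  # column 1: two tiles, 2 px wide, the last one taller
]
canvas, offsets = tile_offsets(sizes)
print(canvas)           # total (width, height) of the merged image
print(offsets[(1, 1)])  # top-left corner of the second tile in column 1
```

Each patch would then be pasted at `image[y0:y0 + h, x0:x0 + w]`. Patches that are narrower than their column would still leave empty pixels, so this only makes the mismatch visible; it does not remove it.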

Second algorithm:

    import numpy
    import sentinelhub

    # `script` and `config` are assumed to be defined as in the first algorithm.

    res = 2500

    coords = [
        [
            [37.81111, 38.724315],
            [43.552852, 38.724315],
            [43.552852, 36.614646],
            [37.81111, 36.614646],
            [37.81111, 38.724315],
        ]
    ]

    x_min, y_min = numpy.min(numpy.array(coords), axis=1)[0]
    x_max, y_max = numpy.max(numpy.array(coords), axis=1)[0]
    bbox = sentinelhub.BBox([x_min, y_min, x_max, y_max], crs=sentinelhub.CRS.WGS84)
    # Pixel size of the whole area at 10 m resolution.
    size = sentinelhub.bbox_to_dimensions(bbox, resolution=10)

    step_x = round(size[0] / res)
    step_y = round(size[1] / res)
    points_x = numpy.linspace(x_min, x_max, step_x)
    points_y = numpy.linspace(y_min, y_max, step_y)
    ratio_y = size[1] / (y_max - y_min)
    ratio_x = size[0] / (x_max - x_min)
    size_x = len(points_x)
    size_y = len(points_y)

    images = []
    rows = []
    for y in range(len(points_y) - 1):
        rows = []
        for x in range(len(points_x) - 1):
            # Corners of the current tile.
            tmpcoords = [[[points_x[x], points_y[y]], [points_x[x], points_y[y + 1]],
                          [points_x[x + 1], points_y[y]], [points_x[x + 1], points_y[y + 1]]]]
            x_min, y_min = numpy.min(tmpcoords, axis=1)[0]
            x_max, y_max = numpy.max(tmpcoords, axis=1)[0]
            tile_bbox = sentinelhub.BBox([x_min, y_min, x_max, y_max], crs=sentinelhub.CRS.WGS84)
            request = sentinelhub.SentinelHubRequest(
                evalscript=script,
                input_data=[
                    sentinelhub.SentinelHubRequest.input_data(
                        data_collection=sentinelhub.DataCollection.SENTINEL2_L2A,
                        time_interval=("2022-04-01", "2022-30"),
                        maxcc=0.9,
                    )
                ],
                responses=[sentinelhub.SentinelHubRequest.output_response("default", sentinelhub.MimeType.TIFF)],
                bbox=tile_bbox,
                size=(2500, 2500),
                config=config,
            )
            image = request.get_data()[0]
            rows.append(image)
            print((x, y), (x, y + 1), (x + 1, y), (x + 1, y + 1))
        # Join the tiles of this row side by side.
        rows = numpy.concatenate(rows, axis=1)
        images.append(rows)

    # Rows were collected from south to north, so flip them before stacking.
    images = images[::-1]
    images = numpy.concatenate(images, axis=0)

To summarize the two algorithms: in the first, I download the patches at their original size for 10 m resolution, save them, and merge them in another script. In the second, I split the coordinates so that each patch should have 10 m resolution and a size of 2500x2500 pixels.

In the first algorithm, there are gaps between the patches, and the large merged image is curved.
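A possible explanation for the curved look, offered as an assumption rather than a confirmed diagnosis: the patches are equal in degrees, but one degree of longitude covers less ground further north, so at a fixed 10 m resolution the northern patches come out narrower in pixels than the southern ones. The sketch below estimates this with a spherical-Earth approximation (the 111,320 m per degree constant and both helper names are part of the illustration, not of the Sentinel Hub API).

```python
import math


def lon_degree_width_m(lat_deg):
    """Approximate ground width of one degree of longitude (spherical Earth)."""
    return 111_320.0 * math.cos(math.radians(lat_deg))


def tile_width_px(tile_deg, lat_deg, resolution_m=10):
    """Pixel width of a tile spanning tile_deg of longitude at latitude lat_deg."""
    return tile_deg * lon_degree_width_m(lat_deg) / resolution_m


# One of the 30 columns of the area of interest, in degrees of longitude.
tile_deg = (43.552852 - 37.81111) / 30

south_px = tile_width_px(tile_deg, 36.614646)  # southern edge of the area
north_px = tile_width_px(tile_deg, 38.724315)  # northern edge of the area
print(round(south_px), round(north_px))
```

If the two numbers differ, rows of patches cannot line up on a single rectangular pixel grid, which would be consistent with both the gaps and the curvature.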

In the second algorithm, although I split the image into patches that should each be 2500x2500 pixels, unfortunately the patches do not always correspond to 2500x2500 pixels at 10 m, so the resolution changes.
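Part of this may be arithmetic: `numpy.linspace` with N points produces N - 1 intervals, and `round` can round down, so each interval can cover more than 2500 pixels' worth of ground while still being requested at 2500x2500. A minimal sketch of splitting a pixel extent into windows of at most 2500 pixels, using the image size from the start of this post, is given below. The helper name `split_extent` is hypothetical, and the sketch works in pixel space only; it does not convert the windows back to coordinates.

```python
import math


def split_extent(total_px, tile_px=2500):
    """Split a pixel extent into consecutive (start, stop) windows of at most tile_px."""
    count = math.ceil(total_px / tile_px)
    return [(i * tile_px, min((i + 1) * tile_px, total_px)) for i in range(count)]


cols = split_extent(49572)
rows = split_extent(21256)
print(len(cols), len(rows))  # number of tiles in each direction
print(cols[-1], rows[-1])    # the last window in each direction is smaller
```

Only the last window in each direction is smaller than 2500 pixels, so every tile would keep the same resolution as long as it is requested at its own pixel size instead of a fixed `(2500, 2500)`.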

What is the most efficient way to download very large images without changing their original size and resolution?

Thanks for your help.