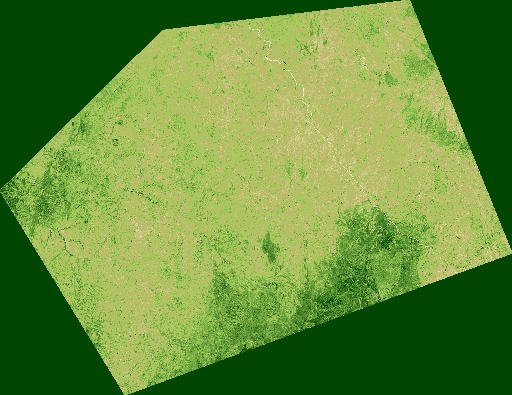

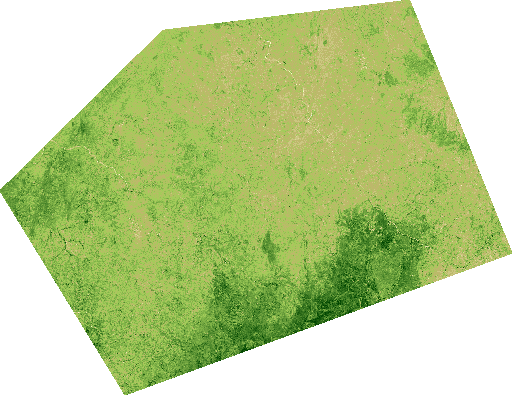

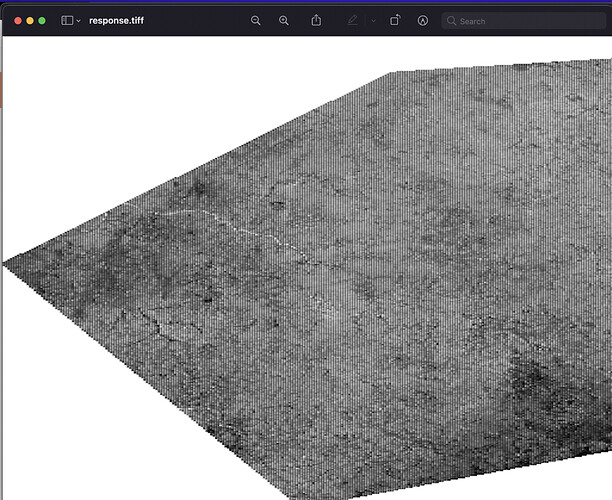

I am trying to write a service that gets the NDVI index of a place passed in as a geometry and returns it as an image. When I call this API, `response = request.get_data()` returns an array, and I can't work out how to return the actual image using Python.
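By default, sentinelhub-py decodes a `MimeType.PNG` response into a list of numpy arrays (one per requested output), which is why an array comes back instead of image bytes. To send an image to the client, the array has to be encoded back into PNG bytes. The sketch below does that with only the standard library, assuming the array is `uint8` with shape `(height, width, 3)` as produced by a 3-band evalscript. `encode_png_rgb` is a hypothetical helper name; in a real project, Pillow's `Image.fromarray(array).save(buffer, format="PNG")` is the more usual way to do the same thing.

```python
import struct
import zlib


def _png_chunk(tag: bytes, data: bytes) -> bytes:
    """Build one PNG chunk: length, type tag, payload, CRC-32 of tag + payload."""
    crc = zlib.crc32(tag + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + tag + data + struct.pack(">I", crc)


def encode_png_rgb(pixels) -> bytes:
    """Encode rows of (r, g, b) values in 0..255 as an 8-bit RGB PNG.

    Hypothetical helper. `pixels` can be a list of lists of tuples or a
    uint8 numpy array of shape (height, width, 3), e.g. one element of
    the list returned by request.get_data().
    """
    height = len(pixels)
    width = len(pixels[0]) if height else 0
    raw = bytearray()
    for row in pixels:
        raw.append(0)  # filter type 0 ("None") at the start of every scanline
        for r, g, b in row:
            raw.extend((int(r), int(g), int(b)))
    # IHDR: width, height, bit depth 8, color type 2 (RGB),
    # default compression, filter and interlace methods
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 2, 0, 0, 0)
    return (
        b"\x89PNG\r\n\x1a\n"
        + _png_chunk(b"IHDR", ihdr)
        + _png_chunk(b"IDAT", zlib.compress(bytes(raw)))
        + _png_chunk(b"IEND", b"")
    )
```

Inside the view this could be used as `data = request.get_data()` followed by `return HttpResponse(encode_png_rgb(data[0]), content_type="image/png")`, using Django's `django.http.HttpResponse`, because DRF's `Response` passes its data through a renderer (JSON by default) rather than sending raw bytes as-is. The pure-Python loop is fine for a 512-pixel tile but noticeably slower than Pillow for large images.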

```python
from rest_framework import status
from rest_framework.response import Response
from rest_framework.views import APIView
from sentinelhub import (
    CRS,
    BBox,
    DataCollection,
    Geometry,
    MimeType,
    SentinelHubRequest,
    SHConfig,
)


class vegetativeIndexView(APIView):

    def get(self, request):
        # Credentials
        CLIENT_ID = 'client_id'
        CLIENT_SECRET = 'client_secret'

        config = SHConfig()
        if CLIENT_ID and CLIENT_SECRET:
            config.sh_client_id = CLIENT_ID
            config.sh_client_secret = CLIENT_SECRET
        else:
            config = None

        # Color-coded NDVI: 3 output bands (RGB) computed from red (B04) and NIR (B08)
        evalscript = """
            //VERSION=3
            function setup() {
                return {
                    input: [{
                        bands: ["B04", "B08"],
                    }],
                    output: {
                        id: "default",
                        bands: 3,
                    }
                };
            }

            function evaluatePixel(sample) {
                let ndvi = (sample.B08 - sample.B04) / (sample.B08 + sample.B04)

                if (ndvi<-0.5) return [0.05,0.05,0.05]
                else if (ndvi<-0.2) return [0.75,0.75,0.75]
                else if (ndvi<-0.1) return [0.86,0.86,0.86]
                else if (ndvi<0) return [0.92,0.92,0.92]
                else if (ndvi<0.025) return [1,0.98,0.8]
                else if (ndvi<0.05) return [0.93,0.91,0.71]
                else if (ndvi<0.075) return [0.87,0.85,0.61]
                else if (ndvi<0.1) return [0.8,0.78,0.51]
                else if (ndvi<0.125) return [0.74,0.72,0.42]
                else if (ndvi<0.15) return [0.69,0.76,0.38]
                else if (ndvi<0.175) return [0.64,0.8,0.35]
                else if (ndvi<0.2) return [0.57,0.75,0.32]
                else if (ndvi<0.25) return [0.5,0.7,0.28]
                else if (ndvi<0.3) return [0.44,0.64,0.25]
                else if (ndvi<0.35) return [0.38,0.59,0.21]
                else if (ndvi<0.4) return [0.31,0.54,0.18]
                else if (ndvi<0.45) return [0.25,0.49,0.14]
                else if (ndvi<0.5) return [0.19,0.43,0.11]
                else if (ndvi<0.55) return [0.13,0.38,0.07]
                else if (ndvi<0.6) return [0.06,0.33,0.04]
                else if (ndvi==0) return [1, 1, 1]
                else return [0,0.27,0]
            }
        """

        bbox = BBox(bbox=[6.394761, 11.482716, 7.136298, 12.044693], crs=CRS.WGS84)
        geometry = Geometry(
            geometry={
                "type": "Polygon",
                "coordinates": [[
                    [6.630955, 12.001712], [6.987991, 12.044693], [7.136298, 11.684514],
                    [6.576026, 11.482716], [6.394761, 11.773259], [6.630955, 12.001712],
                ]],
            },
            crs=CRS.WGS84,
        )

        request = SentinelHubRequest(
            data_folder='nidvii',
            evalscript=evalscript,
            input_data=[
                SentinelHubRequest.input_data(
                    data_collection=DataCollection.SENTINEL2_L2A,
                    time_interval=('2021-04-22', '2021-05-22'),
                )
            ],
            responses=[
                SentinelHubRequest.output_response('default', MimeType.PNG),
            ],
            # bbox=bbox,
            geometry=geometry,
            size=(512, 396),  # (width, height) in pixels; must be whole numbers
            config=config,
        )

        # get_data() decodes the PNG into a list of numpy arrays (one per response)
        response = request.get_data(save_data=True)

        # `response` is a list of numpy arrays here, not image bytes
        return Response(response, status=status.HTTP_200_OK, content_type='image/*')
```
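The evalscript computes NDVI = (B08 - B04) / (B08 + B04) and then walks an `if`/`else if` chain of upper bounds to pick a color. To check locally which color a given pair of band values would get, the same ramp can be mirrored in Python. This is a hypothetical helper (`ndvi_color` is not part of sentinelhub-py), and the colors are the 0 to 1 values the evalscript returns. Because the chain returns at the first `ndvi < t` that holds, a binary search for the first threshold strictly greater than the NDVI gives the same result.

```python
from bisect import bisect_right

# Upper bounds of the evalscript's if/else chain, in the same order.
NDVI_THRESHOLDS = [-0.5, -0.2, -0.1, 0, 0.025, 0.05, 0.075, 0.1, 0.125, 0.15,
                   0.175, 0.2, 0.25, 0.3, 0.35, 0.4, 0.45, 0.5, 0.55, 0.6]
# Color returned when ndvi is below the matching threshold.
NDVI_COLORS = [
    (0.05, 0.05, 0.05), (0.75, 0.75, 0.75), (0.86, 0.86, 0.86), (0.92, 0.92, 0.92),
    (1, 0.98, 0.8), (0.93, 0.91, 0.71), (0.87, 0.85, 0.61), (0.8, 0.78, 0.51),
    (0.74, 0.72, 0.42), (0.69, 0.76, 0.38), (0.64, 0.8, 0.35), (0.57, 0.75, 0.32),
    (0.5, 0.7, 0.28), (0.44, 0.64, 0.25), (0.38, 0.59, 0.21), (0.31, 0.54, 0.18),
    (0.25, 0.49, 0.14), (0.19, 0.43, 0.11), (0.13, 0.38, 0.07), (0.06, 0.33, 0.04),
]
# Final `else` branch (ndvi >= 0.6, or NaN).
DEFAULT_COLOR = (0, 0.27, 0)


def ndvi(b04, b08):
    """NDVI from red (B04) and near-infrared (B08) reflectance."""
    total = b08 + b04
    # With non-negative reflectances a zero sum means both bands are 0, which is
    # 0/0 = NaN in the evalscript's JavaScript; mirror that instead of raising.
    return (b08 - b04) / total if total else float("nan")


def ndvi_color(b04, b08):
    """Hypothetical Python mirror of the evalscript's evaluatePixel()."""
    value = ndvi(b04, b08)
    # Index of the first threshold strictly greater than value, i.e. the first
    # `ndvi < t` test that is true. NaN compares false everywhere, so it falls
    # through to the default, as in JavaScript.
    i = bisect_right(NDVI_THRESHOLDS, value)
    return NDVI_COLORS[i] if i < len(NDVI_COLORS) else DEFAULT_COLOR
```

For example, `ndvi_color(0.1, 0.1)` has an NDVI of exactly 0 and returns `(1, 0.98, 0.8)` from the `ndvi<0.025` branch, which shows that the evalscript's later `ndvi==0` (white) branch can never be reached; if white is intended for 0, that test would need to come before `ndvi<0.025`.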