Hi,

I am trying to display NDVI and True Color layers in Leaflet using the Sentinel Hub Process API. I'm a student with only basic knowledge, and I am trying to develop a sample Process API + Leaflet application. Sorry in advance for the beginner question.

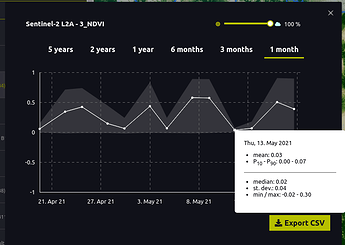

The evalscript I wrote for the True Color layer is not working. Can I use the layers I configured in the Dashboard directly, without writing an evalscript? Or can I define two different evalscripts in my code, one for each layer?

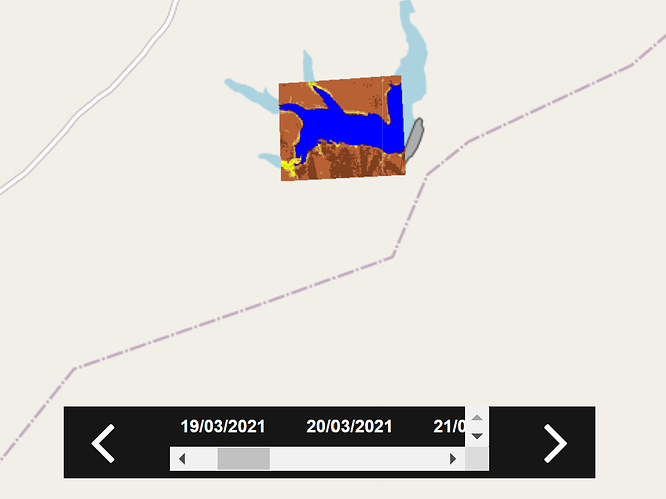

Here is my code, which displays the NDVI layer correctly:

```html
<script>
/*************************

  Sentinel Hub OAuth2 + Process API Leaflet

  How to use:

    1) enter Sentinel Hub client ID and secret
       (go to SH Dashboard -> User settings -> OAuth clients -> "+")

    2) open this file in browser

*************************/

const CLIENT_ID = "a7b4fba9-cc9c-4fdb-9a15-MASKED";
const CLIENT_SECRET = "Xr-F_M>hTi1O+3xUOEtPcKR-MASKED";

const fromDate = "2020-07-01T00:00:00.000Z";
const toDate = "2020-09-01T00:00:00.000Z";
const dataset = "S2L1C";

// NDVI evalscript (converted from v1 to v3). A V3 evalscript must start with
// //VERSION=3 and define both setup() and evaluatePixel().
const evalscript = `//VERSION=3
function setup() {
  return {
    input: [{ bands: ["B04", "B08", "dataMask"] }],
    output: { bands: 4 }
  };
}

function evaluatePixel(samples) {
  if (samples.dataMask == 0) return [0, 0, 0, 0];
  // NDVI
  const val = (samples.B08 - samples.B04) / (samples.B08 + samples.B04);
  if (val < -1.1) return [0, 0, 0, 1];
  else if (val < -0.2) return [0.75, 0.75, 0.75, 1];
  else if (val < -0.1) return [0.86, 0.86, 0.86, 1];
  else if (val < 0) return [1, 1, 0.88, 1];
  else if (val < 0.025) return [1, 0.98, 0.8, 1];
  else if (val < 0.05) return [0.93, 0.91, 0.71, 1];
  else if (val < 0.075) return [0.87, 0.85, 0.61, 1];
  else if (val < 0.1) return [0.8, 0.78, 0.51, 1];
  else if (val < 0.125) return [0.74, 0.72, 0.42, 1];
  else if (val < 0.15) return [0.69, 0.76, 0.38, 1];
  else if (val < 0.175) return [0.64, 0.8, 0.35, 1];
  else if (val < 0.2) return [0.57, 0.75, 0.32, 1];
  else if (val < 0.25) return [0.5, 0.7, 0.28, 1];
  else if (val < 0.3) return [0.44, 0.64, 0.25, 1];
  else if (val < 0.35) return [0.38, 0.59, 0.21, 1];
  else if (val < 0.4) return [0.31, 0.54, 0.18, 1];
  else if (val < 0.45) return [0.25, 0.49, 0.14, 1];
  else if (val < 0.5) return [0.19, 0.43, 0.11, 1];
  else if (val < 0.55) return [0.13, 0.38, 0.07, 1];
  else if (val < 0.6) return [0.06, 0.33, 0.04, 1];
  else return [0, 0.27, 0, 1];
}
`;

// TRUE COLOR evalscript (plain ASCII quotes: typographic quotes are a syntax error)
const evalscript1 = `//VERSION=3
let minVal = 0.0;
let maxVal = 0.4;
let viz = new HighlightCompressVisualizer(minVal, maxVal);

function evaluatePixel(samples) {
  let val = [samples.B04, samples.B03, samples.B02];
  val = viz.processList(val);
  val.push(samples.dataMask);
  return val;
}

function setup() {
  return {
    input: [{ bands: ["B02", "B03", "B04", "dataMask"] }],
    output: { bands: 4 }
  };
}
`;

// Promise which will fetch Sentinel Hub authentication token:
const authTokenPromise = fetch("https://services.sentinel-hub.com/oauth/token", {
  method: "post",
  headers: { "Content-Type": "application/x-www-form-urlencoded" },
  body: `grant_type=client_credentials&client_id=${encodeURIComponent(
    CLIENT_ID
  )}&client_secret=${encodeURIComponent(CLIENT_SECRET)}`,
})
  .then((response) => response.json())
  .then((auth) => auth["access_token"]);

// We need to extend Leaflet's GridLayer to add support for loading images through
// Sentinel Hub Process API. Each layer instance reads its evalscript from
// options.evalscript, so one class can serve both NDVI and True Color.
L.GridLayer.SHProcessLayer = L.GridLayer.extend({
  createTile: function (coords, done) {
    const tile = L.DomUtil.create("img", "leaflet-tile");
    const tileSize = this.options.tileSize;
    tile.width = tileSize;
    tile.height = tileSize;

    const nwPoint = coords.multiplyBy(tileSize);
    const sePoint = nwPoint.add([tileSize, tileSize]);
    const nw = L.CRS.EPSG4326.project(this._map.unproject(nwPoint, coords.z));
    const se = L.CRS.EPSG4326.project(this._map.unproject(sePoint, coords.z));

    authTokenPromise.then((authToken) => {
      // Construct Process API request payload:
      // https://docs.sentinel-hub.com/api/latest/reference/#tag/process
      const payload = {
        input: {
          bounds: {
            bbox: [nw.x, nw.y, se.x, se.y], // a tile's bounding box
            geometry: { // remove to disable clipping
              type: "Polygon",
              coordinates: [
                [
                  [37.033538818359375, 39.246745041633794],
                  [37.03388214111328, 39.23777105285819],
                  [37.04864501953125, 39.23836935449403],
                  [37.04804420471191, 39.24754267396328],
                  [37.033538818359375, 39.246745041633794],
                ],
              ],
            },
            properties: {
              crs: "http://www.opengis.net/def/crs/EPSG/0/4326",
            },
          },
          data: [
            {
              dataFilter: {
                timeRange: { from: fromDate, to: toDate },
                maxCloudCoverage: 10,
                mosaickingOrder: "mostRecent",
              },
              processing: {},
              type: dataset,
            },
          ],
        },
        output: {
          width: 512,
          height: 512,
          responses: [{ identifier: "default", format: { type: "image/png" } }],
        },
        // The payload has a single "evalscript" field (there is no "evalscript1"),
        // so take the script from this layer's options:
        evalscript: this.options.evalscript,
      };

      // Fetch the image:
      fetch("https://services.sentinel-hub.com/api/v1/process", {
        method: "post",
        headers: {
          Authorization: "Bearer " + authToken,
          "Content-Type": "application/json",
          Accept: "*/*",
        },
        body: JSON.stringify(payload),
      })
        .then((response) => response.blob())
        .then((blob) => {
          const objectURL = URL.createObjectURL(blob);
          tile.onload = () => {
            URL.revokeObjectURL(objectURL);
            done(null, tile);
          };
          tile.src = objectURL;
        })
        .catch((err) => done(err, null));
    });

    return tile;
  },
});

L.gridLayer.shProcessLayer = function (opts) {
  return new L.GridLayer.SHProcessLayer(opts);
};

// One layer per evalscript:
const sentinelHubNDVI = L.gridLayer.shProcessLayer({ evalscript: evalscript });
const sentinelHubTrueColor = L.gridLayer.shProcessLayer({ evalscript: evalscript1 });

// OpenStreetMap layer:
let osm = L.tileLayer("https://{s}.tile.openstreetmap.org/{z}/{x}/{y}.png", {
  attribution:
    '© <a href="https://www.openstreetmap.org/copyright">OpenStreetMap</a> contributors',
});

// configure Leaflet:
let baseMaps = {
  OpenStreetMap: osm,
};

let overlayMaps = {
  "NDVI": sentinelHubNDVI,
  "True Color": sentinelHubTrueColor,
};

let map = L.map("map", {
  center: [39.243276, 37.042575], // lat/lng in EPSG:4326
  zoom: 15,
  layers: [osm, sentinelHubNDVI],
});

L.control.layers(baseMaps, overlayMaps).addTo(map);
</script>
```
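For reference, the `nw`/`se` corners that `createTile` obtains through `this._map.unproject` and `L.CRS.EPSG4326.project` can also be computed directly from the tile indices with the standard Web Mercator tile formulas. This is a minimal sketch, assuming Leaflet's default `EPSG:3857` map CRS; `tileBounds` is a hypothetical helper, not part of Leaflet or Sentinel Hub.

```javascript
// Hypothetical helper (not a Leaflet API): lon/lat bounds in EPSG:4326 degrees
// of the Web Mercator (EPSG:3857) tile with indices x, y at zoom z.
function tileBounds(x, y, z) {
  const n = 2 ** z; // number of tiles along each axis at zoom z
  // Tile column -> longitude of its left edge
  const lon = (tx) => (tx / n) * 360 - 180;
  // Tile row -> latitude of its top edge (inverse Mercator)
  const lat = (ty) =>
    (Math.atan(Math.sinh(Math.PI * (1 - (2 * ty) / n))) * 180) / Math.PI;
  return {
    west: lon(x),
    north: lat(y),
    east: lon(x + 1),
    south: lat(y + 1),
  };
}

// Same corner order as the bbox in the Process API payload: [nw.x, nw.y, se.x, se.y]
const b = tileBounds(1, 0, 1);
const bbox = [b.west, b.north, b.east, b.south];
console.log(bbox);
```

For example, `tileBounds(1, 0, 1)` is the north-east quarter of the world: longitudes 0 to 180 and latitudes 0 to about 85.05 (the Web Mercator latitude limit).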