EVALSCRIPTURL does indeed work only with WCS.

For the Process API, it is best practice to keep the EVALSCRIPT stored in your own environment, for versioning purposes.

(And just in case it is not clear: the Process API is strongly recommended over WCS.)
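As a rough illustration of keeping the EVALSCRIPT under version control, here is a minimal Node.js sketch. It assumes the script lives in a local file and is sent inline in the `evalscript` field of the request body; the helper name `buildProcessRequest` and the file path in the usage note are hypothetical, not part of any Sentinel Hub library.

```javascript
const fs = require("fs");

// Hypothetical helper (not a Sentinel Hub API): reads an evalscript from a
// version-controlled file and attaches it to a Process API request body.
function buildProcessRequest(requestBody, evalscriptPath) {
  const evalscript = fs.readFileSync(evalscriptPath, "utf8");
  // The script is sent inline, as a string, in the "evalscript" field.
  return { ...requestBody, evalscript };
}
```

It could be called as `buildProcessRequest({ input: ..., output: ... }, "evalscripts/ndvi.js")`, and the returned object is what you would serialize as the request body.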

The problem in your case is that the request defines several outputs (“bands”, “bool_mask”, “userdata”; check the “identifiers”), whereas the EVALSCRIPT does not specify which output is which. That is why you get the error “Output bands requested but missing…”. (It is probably best to avoid the term “bands” for an identifier, as it gets confusing.)

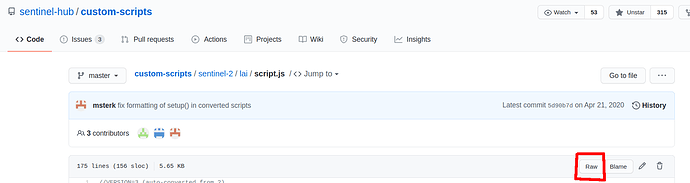

I suggest checking this example.

That said, if I modify your request a bit:

    {
      "input": {
        "bounds": {
          "properties": {
            "crs": "http://www.opengis.net/def/crs/EPSG/0/32632"
          },
          "bbox": [
            684569.915276757, 5239875.965672306, 685176.9600969265, 5240483.455006348
          ]
        },
        "data": [
          {
            "type": "S2L2A",
            "dataFilter": {
              "timeRange": {
                "from": "2019-08-02T11:17:49Z",
                "to": "2019-08-02T11:17:49Z"
              }
            }
          }
        ]
      },
      "output": {
        "width": 61,
        "height": 61,
        "responses": [
          {
            "identifier": "bands",
            "format": {
              "type": "image/png"
            }
          },
          {
            "identifier": "bool_mask",
            "format": {
              "type": "image/tiff"
            }
          },
          {
            "identifier": "userdata",
            "format": {
              "type": "application/json"
            }
          }
        ]
      }
    }

and the EVALSCRIPT to:

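    //VERSION=3

    function evaluatePixel(samples) {
      // Normalized difference of B08 and B04
      let val = index(samples.B08, samples.B04);
      // Keys must match the output ids declared in setup()
      return {
        bands: val,
        bool_mask: samples.dataMask
      }
    }

    function setup() {
      return {
        input: [{
          bands: [
            "B04",
            "B08",
            "dataMask"
          ]
        }],
        // Each id must match an "identifier" in the request's responses
        output: [
          {id: "bands", bands: 2},
          {id: "bool_mask", bands: 1}
        ]
      }
    }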

it works for me.
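To catch this kind of mismatch before sending a request, something like the following Node.js sketch may help. It compares the `identifier` values in the request's `responses` with the `id` values declared in `setup()`. The helper name `findUndeclaredOutputs` is hypothetical, and treating “userdata” as exempt is an assumption based on the working example above, where it is requested without being declared in `setup()`. The single `default` output in the second call is only an illustrative stand-in for a script that does not declare the requested ids.

```javascript
// Hypothetical helper: lists response identifiers that the evalscript's
// setup() does not declare as outputs.
// Assumption: "userdata" needs no entry in setup(), as in the example above.
function findUndeclaredOutputs(responses, setupOutputs, exempt = ["userdata"]) {
  const declared = new Set(setupOutputs.map((o) => o.id));
  return responses
    .map((r) => r.identifier)
    .filter((id) => !declared.has(id) && !exempt.includes(id));
}

// Identifiers taken from the request above.
const responses = [
  { identifier: "bands" },
  { identifier: "bool_mask" },
  { identifier: "userdata" },
];

// Outputs as declared in the setup() above.
const setupOutputs = [
  { id: "bands", bands: 2 },
  { id: "bool_mask", bands: 1 },
];

console.log(findUndeclaredOutputs(responses, setupOutputs));
// prints []

// Illustrative only: a script that declares a single, differently named output.
console.log(findUndeclaredOutputs(responses, [{ id: "default", bands: 1 }]));
// prints [ 'bands', 'bool_mask' ]
```

An empty result means every requested identifier has a matching output; anything listed would trigger the “Output … requested but missing” kind of error.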