Hello!

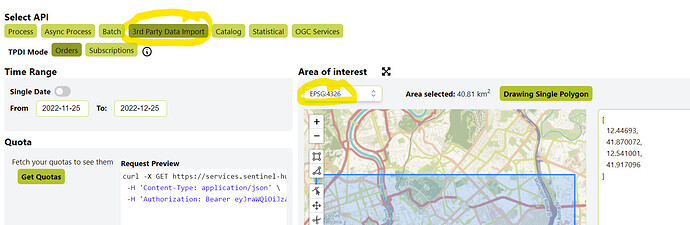

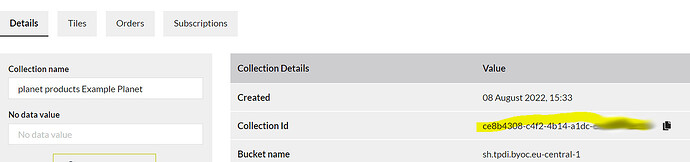

I have downloaded Airbus Pleiades imagery using the Request Builder.

I would like to ask a few questions to verify that I have followed the process correctly.

- When I downloaded the Pleiades data, I set the CRS to 4326. Just to be sure, that means the downloaded image is also in WGS84, right?

- Accessing the image using the Process API:

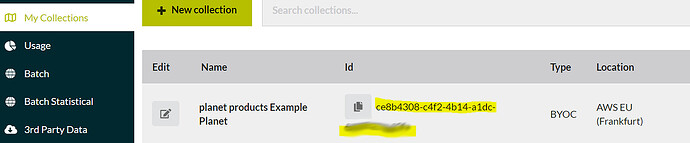

I want to access my purchased images. To do that, I'm requesting the images from the BYOC collection using a bbox that matches each image. Is there any way to request the images without a bbox geometry? My code looks something like this:

    # Evalscript to access the Pleiades imagery from BYOC data
    evalscript_plds = """
    //VERSION=3
    function setup() {
      return {
        input: ["B0", "B1", "B2", "B3", "PAN"],
        output: { bands: 5 },
      };
    }

    function evaluatePixel(sample) {
      return [sample.B0 / 10000, sample.B1 / 10000, sample.B2 / 10000, sample.B3 / 10000, sample.PAN / 10000];
    }
    """

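    import geopandas as gpd
    from sentinelhub import CRS, BBox, MimeType, SentinelHubRequest, bbox_to_dimensions

    def get_bbox_from_shape(shape: gpd.GeoDataFrame, resolution: float):
        # Bounding box of the geometry, in WGS84 degrees
        minx, miny, maxx, maxy = shape.geometry.total_bounds
        bbox_coords_wgs84 = [minx, miny, maxx, maxy]
        bbox = BBox(bbox=bbox_coords_wgs84, crs=CRS.WGS84)
        # Output size in pixels for the requested resolution (in meters)
        bbox_size = bbox_to_dimensions(bbox, resolution=resolution)
        return bbox_size, bbox, bbox_coords_wgs84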

    # Access each image using the bounding box of the image. gdf is the GeoDataFrame of the tiles.
    for index, row in gdf.iterrows():
        tmp = gpd.GeoDataFrame(gdf.iloc[index]).T

        # Calculate the bbox of the image (not pan-sharpened; I use 0.5 for the pan-sharpening band)
        bbox_size, bbox, bbox_coords_wgs84 = get_bbox_from_shape(tmp, 0.5)

        # Request the data
        request = SentinelHubRequest(
            evalscript=evalscript,
            input_data=[SentinelHubRequest.input_data(data_collection=data_collection, time_interval=img_time)],
            responses=[SentinelHubRequest.output_response("default", MimeType.TIFF)],
            bbox=bbox,
            size=bbox_size,
            config=config,
        )

        img = request.get_data()[0]

Is it possible to do that without defining a geometry?

- Regarding the CRS: I'm a bit confused because I use metric units in the bbox function and also when I request the image, even though the image is in WGS84 (non-metric). The results end up in the correct place, but I'm confused because the units are metric.

The bbox function:

    # Calculate the bounding box for a geometry (taken from the sentinel-hub tutorial)
    def get_bbox_from_shape(shape: gpd.GeoDataFrame, resolution: float):
        minx, miny, maxx, maxy = shape.geometry.total_bounds
        bbox_coords_wgs84 = [minx, miny, maxx, maxy]
        bbox = BBox(bbox=bbox_coords_wgs84, crs=CRS.WGS84)
        bbox_size = bbox_to_dimensions(bbox, resolution=resolution)
        return bbox_size, bbox, bbox_coords_wgs84

I am worried that this influences my final results.
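To make the confusion concrete, here is a rough sketch of how a bbox given in degrees could be turned into a pixel size for a resolution given in meters. It is only a flat-Earth approximation: the helper name `approx_dimensions` and the constant of roughly 111,320 m per degree are my own assumptions for illustration, and this is not how `bbox_to_dimensions` is actually implemented.

```python
import math

# Assumption: approximate length of one degree of latitude, in meters
METERS_PER_DEGREE = 111_320.0


def approx_dimensions(minx, miny, maxx, maxy, resolution_m):
    """Approximate (width, height) in pixels for a WGS84 bbox and a metric resolution."""
    # One degree of longitude shrinks with the cosine of the latitude
    mid_lat = math.radians((miny + maxy) / 2.0)
    width_m = (maxx - minx) * METERS_PER_DEGREE * math.cos(mid_lat)
    height_m = (maxy - miny) * METERS_PER_DEGREE
    return round(width_m / resolution_m), round(height_m / resolution_m)
```

If this picture is roughly right, the bbox stays in degrees and only the output size in pixels depends on the metric resolution, but I would appreciate confirmation.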

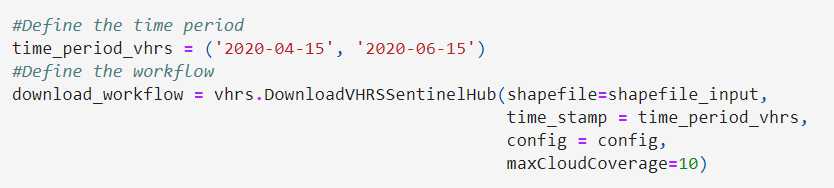

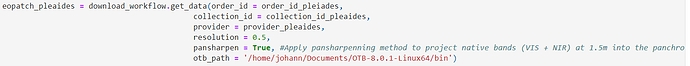

- Pan sharpening:

Based on the tutorial and this question, I have been using the following evalscript to get a pan-sharpened image:

    # Evalscript to access the Pleiades imagery from BYOC data. The units are reflectance (/10000).
    evalscript_PAN_plds = """
    //VERSION=3
    function setup() {
      return {
        input: ["B0", "B1", "B2", "B3", "PAN"],
        output: { bands: 4 },
      };
    }

    function evaluatePixel(samples) {
      let sudoPanW = (samples.B0 + samples.B1 + samples.B2 + samples.B3) / 4
      let ratioW = samples.PAN / sudoPanW
      let blue = 2.5 * samples.B0 * ratioW
      let green = 2.5 * samples.B1 * ratioW
      let red = 2.5 * samples.B2 * ratioW
      let nir = 2.5 * samples.B3 * ratioW
      return [blue / 10000, green / 10000, red / 10000, nir / 10000]
    }
    """
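To make the arithmetic explicit, here is a small pure-Python sketch of what I understand the evalscript to compute for a single pixel. The function name `pan_sharpen_pixel` and its `gain` and `scale` parameters are hypothetical names of my own. The zero check is also my own addition: the evalscript has no such guard, so a pixel whose four bands are all zero would divide by zero there.

```python
def pan_sharpen_pixel(b0, b1, b2, b3, pan, gain=2.5, scale=10000.0):
    """Ratio-based pan sharpening of one pixel, mirroring the evalscript arithmetic."""
    # Pseudo-panchromatic value: mean of the four multispectral bands
    pseudo_pan = (b0 + b1 + b2 + b3) / 4.0
    if pseudo_pan == 0:
        # Assumption: return zeros rather than divide by zero
        return [0.0, 0.0, 0.0, 0.0]
    # Scale every band by how much brighter the real PAN band is than the pseudo-pan
    ratio = pan / pseudo_pan
    return [gain * band * ratio / scale for band in (b0, b1, b2, b3)]


# Example: a flat pixel where PAN equals the band mean
print(pan_sharpen_pixel(1000, 1000, 1000, 1000, 1000))
```

With this formula each output band is just the input band multiplied by the same per-pixel ratio, so I would expect every pixel with valid band and PAN values to be sharpened.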

Before:

The pan band:

After pan sharpening:

As you can see, it seems that some pixels weren't pan-sharpened. Do you have any idea why that could happen?

(Also, there is an issue with the colors, but that is for another post.)

Thanks in advance