Hello everyone,

I'm trying to run the Slovenia example again, but now with Sentinel-1A data.

I tried this configuration, as you suggested:

```python
import datetime

from eolearn.core import FeatureType
from eolearn.io import SentinelHubEvalscriptTask
from sentinelhub import DataCollection

# Evalscript returning VV and VH backscatter (float) plus the data mask
s1_evalscript = """
//VERSION=3

function setup() {
    return {
        input: [{
            bands: ["VV", "VH", "dataMask"],
            metadata: ["bounds"]
        }],
        output: [
            {
                id: "VV",
                bands: 1,
                sampleType: "FLOAT32",
                nodataValue: NaN,
            },
            {
                id: "VH",
                bands: 1,
                sampleType: "FLOAT32",
                nodataValue: NaN,
            },
            {
                id: "MASK",
                bands: 1,
                sampleType: "UINT8",
                nodataValue: 0,
            }
        ]
    };
}

function evaluatePixel(samples) {
    return {
        VV: [samples.VV],
        VH: [samples.VH],
        MASK: [samples.dataMask]
    };
}
"""

# Terrain-corrected gamma0 backscatter, orthorectified with the Copernicus 30 m DEM
s1_processing = {
    "backCoeff": "GAMMA0_TERRAIN",
    "orthorectify": True,
    "demInstance": "COPERNICUS_30",
}

s1_input = SentinelHubEvalscriptTask(
    features={
        FeatureType.DATA: {'VV', 'VH'},
        FeatureType.MASK: {'MASK'}
    },
    evalscript=s1_evalscript,
    data_collection=DataCollection.SENTINEL1,
    resolution=10,
    time_difference=datetime.timedelta(minutes=120),
    aux_request_args={'processing': s1_processing},
    max_threads=5,
)
```
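For reference, here is a minimal sketch of how one of the downloaded bands could be combined with its mask before plotting. It assumes eo-learn's usual layout for `FeatureType.DATA` and `FeatureType.MASK` features, i.e. arrays of shape (time, height, width, channels), with a single channel per output as in the evalscript above. The function name `masked_band` and the small synthetic arrays are hypothetical stand-ins for `eopatch.data['VV']` and `eopatch.mask['MASK']`.

```python
import numpy as np


def masked_band(band: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Return band values with no-data pixels set to NaN.

    Assumes (time, height, width, 1) arrays, as produced by an evalscript
    output with `bands: 1` (hypothetical helper, not part of eo-learn).
    """
    values = band[..., 0].astype(np.float32)  # drop the single channel axis
    valid = mask[..., 0].astype(bool)  # dataMask: 1 = valid, 0 = no data
    return np.where(valid, values, np.nan)


# Hypothetical stand-ins for eopatch.data['VV'] and eopatch.mask['MASK']
vv = np.full((2, 3, 3, 1), 0.05, dtype=np.float32)
mask = np.ones((2, 3, 3, 1), dtype=np.uint8)
mask[0, 0, 0, 0] = 0  # mark one pixel of the first acquisition as no data

clean_vv = masked_band(vv, mask)
print(clean_vv.shape)  # (2, 3, 3)
```

Setting invalid pixels to NaN (rather than 0) lets `imshow` leave them blank instead of drawing them as very low backscatter.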

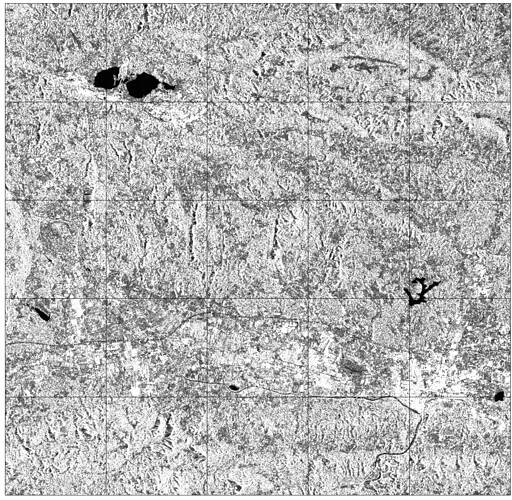

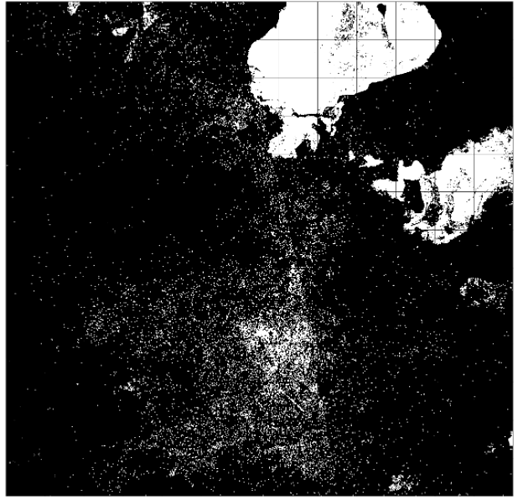

Now, after downloading the images, I would like to visualize the image acquired on a specific date.

In the Slovenia example, this is done with the following code:

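```python
import datetime
import os

import dateutil.tz
import matplotlib.pyplot as plt
import numpy as np
from eolearn.core import EOPatch
from tqdm.auto import tqdm

# patchIDs and EOPATCH_FOLDER are defined earlier in the Slovenia notebook
fig, axs = plt.subplots(nrows=5, ncols=5, figsize=(20, 20))

date = datetime.datetime(2019, 7, 1, tzinfo=dateutil.tz.tzutc())

for i in tqdm(range(len(patchIDs))):
    eopatch_path = os.path.join(EOPATCH_FOLDER, f'eopatch_{i}')
    eopatch = EOPatch.load(eopatch_path, lazy_loading=True)

    # Index of the acquisition closest to the requested date
    dates = np.array(eopatch.timestamp)
    closest_date_id = np.argsort(abs(date - dates))[0]

    # Reorder bands to red, green, blue, brighten by 3.5 and clip to [0, 1]
    ax = axs[i // 5][i % 5]
    ax.imshow(np.clip(eopatch.data['BANDS'][closest_date_id][..., [2, 1, 0]] * 3.5, 0, 1))
    ax.set_xticks([])
    ax.set_yticks([])
    ax.set_aspect("auto")
    del eopatch

fig.subplots_adjust(wspace=0, hspace=0)
```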
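The date-selection step in that loop does not depend on the data type, so it should carry over unchanged to the Sentinel-1 patches. Below is a minimal, self-contained sketch of the same idea as `np.argsort(abs(date - dates))[0]`, written with `np.argmin` on the absolute time difference in seconds. The helper name `closest_date_index` and the example timestamps are hypothetical. Both the target and the timestamps must be either timezone-aware or naive; mixing them makes Python raise a `TypeError` on subtraction.

```python
import datetime

import numpy as np


def closest_date_index(timestamps, target):
    """Return the index of the timestamp closest to `target` (hypothetical helper)."""
    # Absolute time difference in seconds for each acquisition
    deltas = np.array([abs((target - ts).total_seconds()) for ts in timestamps])
    return int(np.argmin(deltas))


utc = datetime.timezone.utc
timestamps = [
    datetime.datetime(2019, 6, 20, 5, 0, tzinfo=utc),
    datetime.datetime(2019, 7, 2, 5, 0, tzinfo=utc),
    datetime.datetime(2019, 7, 14, 5, 0, tzinfo=utc),
]
target = datetime.datetime(2019, 7, 1, tzinfo=utc)
print(closest_date_index(timestamps, target))  # 1
```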

How can I adapt this code to my example so that it visualizes the radar images?
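One possible approach, sketched here under stated assumptions rather than as the official method: radar backscatter is not reflectance, so the `[..., [2, 1, 0]] * 3.5` scaling from the optical case does not apply. A common alternative is to convert the linear gamma0 values to decibels and stretch them to [0, 1], either as a single grayscale band or as a false-color composite (VV, VH, VV/VH ratio). The helper names and the dB display ranges below (-20 to 0 dB for VV, -25 to -5 dB for VH, 0 to 15 dB for the ratio) are assumptions chosen for illustration and may need tuning for your area.

```python
import numpy as np


def to_db(linear, eps=1e-6):
    """Convert linear backscatter (e.g. gamma0) to decibels; NaN stays NaN."""
    return 10.0 * np.log10(np.maximum(linear, eps))


def rescale(values, vmin, vmax):
    """Map [vmin, vmax] linearly to [0, 1] and clip (NaN stays NaN)."""
    return np.clip((values - vmin) / (vmax - vmin), 0.0, 1.0)


def s1_false_color(vv, vh):
    """RGB composite (VV, VH, VV/VH) from 2-D linear backscatter arrays.

    Hypothetical helper; the display ranges are assumptions, not standards.
    """
    vv_db = to_db(vv)
    vh_db = to_db(vh)
    red = rescale(vv_db, -20.0, 0.0)
    green = rescale(vh_db, -25.0, -5.0)
    blue = rescale(vv_db - vh_db, 0.0, 15.0)  # ratio VV/VH expressed in dB
    return np.stack([red, green, blue], axis=-1)


# Small synthetic example (linear gamma0 values, one no-data pixel)
vv = np.array([[0.1, 0.01], [1.0, np.nan]])
vh = np.array([[0.01, 0.001], [0.1, np.nan]])
rgb = s1_false_color(vv, vh)
print(rgb.shape)  # (2, 2, 3)
```

Inside the loop, assuming the features are named `VV` and `VH` as in the task above, the `ax.imshow(...)` line could then become something like `ax.imshow(s1_false_color(eopatch.data['VV'][closest_date_id][..., 0], eopatch.data['VH'][closest_date_id][..., 0]))`, or, for a single grayscale band, `ax.imshow(rescale(to_db(eopatch.data['VV'][closest_date_id][..., 0]), -20, 0), cmap='gray')`.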

Any help would be appreciated!